Sarath Krishnan PalliyembilOct. 29, 2024

Introduction to NLTK

In the ever-growing field of data science, one of the most fascinating and useful areas is Natural Language Processing (NLP). It enables machines to understand, interpret, and generate human language, paving the way for applications like chatbots, sentiment analysis, machine translation, and more. A tool that stands at the forefront of NLP is the Natural Language Toolkit (NLTK). This Python library is a robust and easy-to-use framework for working with human language data (text). It offers a suite of libraries and programs to help process and analyze large corpora of text, which is invaluable for data scientists who are venturing into NLP.

In this blog, we’ll dive into what NLTK is, its key features, and how it can be used for NLP tasks in data science.

NLTK, or the Natural Language Toolkit, is an open-source Python library designed for working with human language data. It provides a comprehensive suite of tools for performing text analysis, including tokenization, stemming, tagging, parsing, and machine learning for linguistic tasks. Whether you are analyzing small texts or large corpora, NLTK is one of the best go-to libraries for NLP.

NLTK was developed by Steven Bird and Edward Loper in 2001 as part of a research project to teach linguistic concepts in computational form. Today, it is widely used by data scientists, linguists, and researchers for developing NLP models and performing text analysis.

Language is one of the most abundant forms of data available today. From social media posts to customer reviews and legal documents, large amounts of unstructured text data are generated daily. NLTK allows data scientists to harness this data for meaningful insights by transforming it into a structured format. Here's why NLTK is critical for data science:

Preprocessing Text Data: The first step in any NLP task is preprocessing text, and NLTK excels at this. It allows you to tokenize, remove stopwords, and normalize text, which are essential steps to clean the raw data.

Linguistic Feature Extraction: NLTK provides tools to extract various linguistic features like parts of speech (POS), named entities, and grammatical structures, which can be used to create better models.

Handling Large Datasets: NLTK works efficiently with large corpora, making it ideal for large-scale text analysis. It includes a large collection of sample corpora for training and testing models.

Machine Learning Integration: NLTK integrates seamlessly with machine learning libraries, enabling data scientists to build and test classifiers for various NLP tasks like sentiment analysis or topic modeling.

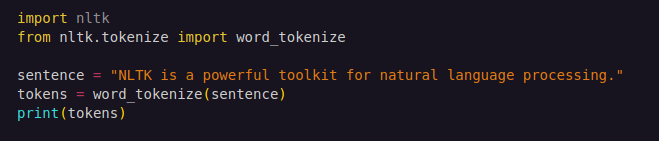

Tokenization is the process of breaking down text into smaller units such as words or sentences. NLTK provides several built-in tokenizers to split text into manageable chunks:

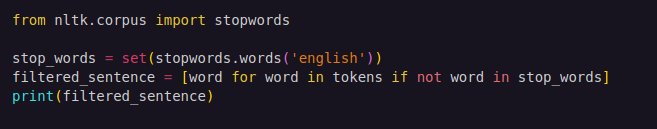

Stop words are common words such as "is", "the", and "and" that don’t carry much information and can be filtered out during analysis. NLTK has a pre-built list of stop words that can be removed:

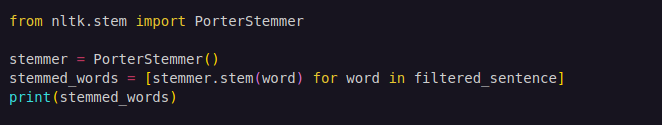

Stemming and lemmatization reduce words to their root forms. Stemming uses a crude heuristic, while lemmatization ensures the root word is valid in the language. NLTK provides functions for both:

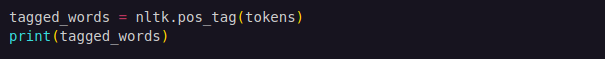

Part-of-speech tagging identifies the grammatical role of each word in a sentence, such as noun, verb, adjective, etc.:

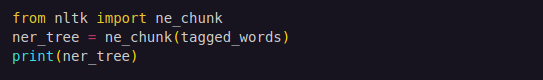

NER classifies named entities in text like people, organizations, and locations. This is particularly useful for tasks like information extraction:

One of the popular applications of NLTK in data science is sentiment analysis. By classifying texts into positive, negative, or neutral sentiments, businesses can analyze customer feedback, social media posts, and product reviews.

Using NLTK, data scientists can classify texts into predefined categories such as spam detection in emails, topic classification in articles, or language identification.

With large datasets, topic modeling allows for automatic discovery of abstract topics within a text corpus. NLTK can be used in conjunction with libraries like Gensim to perform topic modeling.

NLTK’s integration with machine learning algorithms allows for the creation of translation systems by training models on parallel corpora in different languages.

While NLTK is a powerful tool, it does come with some challenges:

Speed: NLTK can be slow for processing large datasets compared to more recent libraries like spaCy.

Learning Curve: Although user-friendly, NLTK requires a solid understanding of NLP concepts, making it somewhat challenging for beginners.

Not State-of-the-Art: Newer models and frameworks like Hugging Face’s Transformers outperform NLTK in deep learning tasks like machine translation or question answering.

NLTK remains one of the most versatile and beginner-friendly libraries for working with text data in Python. Its wide array of tools, ease of use, and strong community support make it an ideal choice for data scientists looking to perform natural language processing tasks. Whether you're working on sentiment analysis, topic modeling, or just cleaning and preparing your text data, NLTK provides the foundational building blocks for NLP.

As the demand for text data analysis grows in the data science field, NLTK will continue to be a critical library for unlocking the value hidden in unstructured data. If you're new to NLP or data science, NLTK is a great place to start your journey!

0