Anjana Bhaskaran, Aug. 7, 2024

In the ever-evolving world of data science, the tools and technologies available to analysts and engineers are crucial in shaping their work. One such tool that has gained considerable attention and acclaim in recent years is PyTorch. Developed by Facebook's AI Research lab, PyTorch has become a popular framework for building machine learning models and deep learning networks.

PyTorch is an open-source machine learning library based on the Torch library. It provides a robust framework for creating and training deep learning models, offering the flexibility and efficiency that modern data science tasks demand. PyTorch is known for its dynamic computation graph, which is built as the code runs and therefore lets users change the network’s behavior on the fly, making it particularly useful for research and experimentation.

PyTorch’s automatic differentiation engine (autograd) uses reverse-mode automatic differentiation, drawing inspiration from earlier define-by-run work such as Chainer. Essentially, it works like a tape recorder: it records operations as they are executed during the forward pass and then replays them in reverse to compute gradients. This makes PyTorch relatively simple to debug and well suited to applications such as dynamic neural networks. It is popular for prototyping because the recorded graph can be different on every iteration.
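To make the tape-recorder analogy concrete, here is a toy sketch in plain Python (no PyTorch required). The `Tape` and `Var` classes are hypothetical and only illustrate the idea; PyTorch’s real autograd engine is far more general. Each operation appends a backward rule to the tape during the forward pass, and `Tape.backward` replays the tape in reverse, accumulating gradients with the chain rule.

```python
class Tape:
    """Hypothetical toy tape: records operations during the forward pass."""

    def __init__(self):
        self.records = []  # (output_var, backward_fn) pairs in execution order

    def var(self, value):
        return Var(value, self)

    def backward(self, output):
        # Seed d(output)/d(output) = 1, then replay the recorded ops in reverse.
        output.grad = 1.0
        for out, backward_fn in reversed(self.records):
            backward_fn(out.grad)


class Var:
    """Hypothetical scalar value that records its operations on a Tape."""

    def __init__(self, value, tape):
        self.value = value
        self.grad = 0.0
        self.tape = tape

    def __add__(self, other):
        out = Var(self.value + other.value, self.tape)

        def backward_fn(g):
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += g
            other.grad += g

        self.tape.records.append((out, backward_fn))
        return out

    def __mul__(self, other):
        out = Var(self.value * other.value, self.tape)

        def backward_fn(g):
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += g * other.value
            other.grad += g * self.value

        self.tape.records.append((out, backward_fn))
        return out


# Usage: z = x*y + x, so dz/dx = y + 1 and dz/dy = x.
tape = Tape()
x = tape.var(3.0)
y = tape.var(4.0)
z = x * y + x
tape.backward(z)
print(x.grad, y.grad)  # 5.0 3.0
```

Because the tape is filled while the code runs, a different branch or loop count in the forward pass simply produces a different tape, which is the property that makes this style convenient for prototyping.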

The core components of PyTorch are tensors and computation graphs. Let’s look at what they are:

Tensors

At the heart of PyTorch lie tensors, the fundamental building blocks for storing and manipulating data. You can think of tensors as mathematical objects, similar to arrays, that natively support GPU acceleration for fast computation.

In other words, tensors are PyTorch’s core data type: multidimensional arrays used to store and manipulate a model’s inputs and outputs, as well as its parameters. Tensors are similar to NumPy’s ndarrays, except that tensors can run on GPUs or other hardware accelerators to speed up computation.
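A minimal sketch of the NumPy comparison, assuming PyTorch and NumPy are installed. `torch.from_numpy` wraps an existing array without copying it, and `.to(device)` moves a tensor to a GPU when one is available; the code falls back to the CPU otherwise, so it runs on either.

```python
import numpy as np
import torch

# Wrap a NumPy array as a tensor; from_numpy shares memory with the array.
arr = np.array([[1.0, 2.0], [3.0, 4.0]])
t = torch.from_numpy(arr)

arr[0, 0] = 10.0       # the change is visible through the tensor
print(t[0, 0].item())  # 10.0

# Use a GPU if one is available, otherwise stay on the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
t_dev = t.to(device)

# Element-wise and matrix operations read much like NumPy.
result = (t_dev * 2) @ t_dev.T

# Convert back to NumPy; .cpu() is required first if the tensor is on a GPU.
back = result.cpu().numpy()
print(back)
```

The explicit `device` variable keeps the same script usable on machines with and without a GPU.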

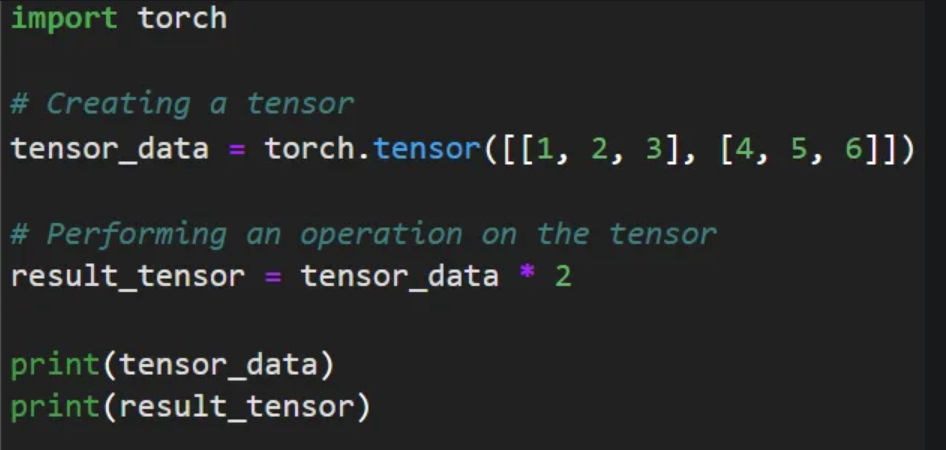

Creating a tensor in PyTorch:
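A minimal sketch, assuming PyTorch is installed (for example via `pip install torch`), showing a few common ways to create tensors and inspect their shape and data type:

```python
import torch

# From a nested Python list; the dtype is inferred (float32 for Python floats).
a = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
print(a.shape, a.dtype)  # torch.Size([2, 3]) torch.float32

# Tensors of a given shape filled with a constant.
zeros = torch.zeros(2, 3)
ones = torch.ones(2, 3, dtype=torch.int64)

# Random values drawn uniformly from [0, 1).
rand = torch.rand(2, 3)

# A 1-D range of integers, reshaped into a 2x3 matrix.
r = torch.arange(6).reshape(2, 3)
print(r)

# A tensor whose operations will be tracked by autograd.
w = torch.randn(3, requires_grad=True)
```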

Graphs

Graphs are data structures consisting of nodes (also called vertices) connected by edges. Modern deep learning frameworks are built around this concept, representing neural networks as graphs of computations. PyTorch keeps a record of tensors and executed operations in a directed acyclic graph (DAG) consisting of Function objects. In this DAG, the leaves are the input tensors and the roots are the output tensors.
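A small sketch of inspecting this DAG, assuming PyTorch is installed. Every non-leaf tensor exposes the Function node that produced it through `grad_fn`, and each node lists its inputs in `next_functions`. The helper `collect` is hypothetical, written only to walk the graph from the root back to the leaves.

```python
import torch

# Leaf tensors: created directly by the user, with gradient tracking enabled.
x = torch.tensor(2.0, requires_grad=True)
w = torch.tensor(3.0, requires_grad=True)

# Each operation adds a Function node to the graph.
y = w * x
z = y + x  # z is the root (output) of this small DAG

print(x.is_leaf, z.is_leaf)  # True False
print(x.grad_fn)             # None: leaves were not produced by an operation
print(z.grad_fn)             # e.g. <AddBackward0 object at 0x...>


def collect(fn, names):
    """Hypothetical helper: depth-first walk from a grad_fn node to the leaves."""
    if fn is None:
        return names
    names.append(type(fn).__name__)
    for next_fn, _ in fn.next_functions:
        collect(next_fn, names)
    return names


# Leaf tensors appear as AccumulateGrad nodes; x is reached twice because it
# feeds both the multiplication and the addition.
nodes = collect(z.grad_fn, [])
print(nodes)

# Backpropagation traverses the DAG from the root back to the leaves.
z.backward()
print(x.grad, w.grad)  # dz/dx = w + 1 = 4, dz/dw = x = 2
```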

In some frameworks, such as TensorFlow 1.x, the computation graph is a static object that is defined before it is run (TensorFlow 2 switched to eager execution by default). PyTorch instead uses dynamic computation graphs: the graph is built and rebuilt at runtime, and the same code that performs the forward pass also creates the data structure needed for backpropagation. PyTorch is often described as the first define-by-run deep learning framework to match the capabilities and performance of static-graph frameworks like TensorFlow, which makes it a good fit for everything from standard convolutional networks to recurrent neural networks.
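A minimal sketch of define-by-run in practice, assuming PyTorch is installed. The function `forward` is a hypothetical example: how many multiplications run depends on an ordinary Python integer, and whether the squaring branch runs depends on the data itself. Autograd therefore records a different graph on each call, and `backward()` differentiates whichever graph was actually executed.

```python
import torch


def forward(x, n_steps):
    # Plain Python control flow; the graph is recorded as these lines execute.
    y = x
    for _ in range(n_steps):
        y = y * 2
    if y.sum() > 0:  # data-dependent branch
        y = y * y
    return y.sum()


grads = []
for values, n_steps in [([1.0, -0.5], 1), ([1.0, -0.5], 3), ([-1.0, 0.5], 1)]:
    x = torch.tensor(values, requires_grad=True)
    loss = forward(x, n_steps)  # a fresh graph is built on every call
    loss.backward()
    grads.append(x.grad.tolist())
    print(values, n_steps, x.grad)
```

In the third case the sum is negative, so the squaring branch is skipped and the gradient comes from the loop alone; no graph has to be declared or recompiled for that to work.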

In conclusion, PyTorch stands out as a powerful tool for data science thanks to its dynamic computation graphs, seamless Python integration, automatic differentiation, rich ecosystem, performance, and strong community support. Whether you’re conducting research or developing production models, PyTorch’s features and benefits make it a compelling choice for a wide range of data science applications.
