Jyothis, Oct. 23, 2024

In today's data-driven world, data is often referred to as the "new oil." It's a valuable resource that can be used to draw insights and make informed decisions. While there are numerous sources of data available online, manually collecting and organizing this information can be a time-consuming and tedious task.

This is where Scrapy comes into play. It's a Python-based web scraping framework that automates the process of extracting data from websites. By leveraging Scrapy's powerful features, you can efficiently gather data from various sources, including websites, APIs, and web applications.

In this blog post, we'll provide an introduction to Scrapy, exploring its key features, benefits, and how to get started with web scraping using this versatile tool.

Now let us see how to extract data from quotes.toscrape.com, a website that lists quotes from famous authors.

1. Set up a Scrapy project:

Move to the directory where you want to create your project, open a terminal, and run the following command to create a Scrapy project.

>> scrapy startproject quotestoscrape

This will create a folder named ‘quotestoscrape’ containing the essential components of your project.

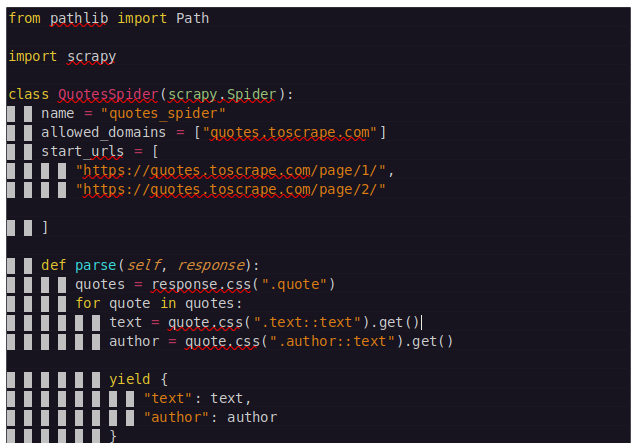

2. Define a spider:

Spiders are classes that you define and that Scrapy uses to scrape information from a website (or a group of websites). They must subclass Spider and define the initial requests to make, optionally how to follow links in the pages, and how to parse the downloaded page content to extract data.

This is the code for our first Spider. Save it in a file named quotes_spider.py under the quotestoscrape/spiders directory in your project:

3. Run the spider:

Now go to the project directory and run the following command in the terminal to run the spider and extract data from the specified URLs.

>> scrapy crawl quotes_spider

4. Export the scraped data:

You can export the scraped data in various formats like CSV, JSON, and XML. Here's an example command to export the data to a CSV file.

>> scrapy crawl quotes_spider -o quotes.csv

This will create a CSV file named quotes.csv containing the extracted data.
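
To check the export, you could load the file back with Python's standard csv module. The helper names load_rows and summarize below are hypothetical and only for illustration. The sketch assumes the file has a header row, which is what the CSV export writes by default.

```python
import csv
from pathlib import Path


def load_rows(csv_path):
    """Read an exported CSV file into a list of dictionaries, one per row."""
    with Path(csv_path).open(newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def summarize(rows):
    """Return a one-line description of the loaded rows."""
    if not rows:
        return "0 rows"
    columns = ", ".join(rows[0].keys())
    return f"{len(rows)} rows, columns: {columns}"
```

Calling summarize(load_rows("quotes.csv")) from the directory that holds the export should report how many rows were written and which columns they contain.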

Through the practical example of extracting quotes from quotes.toscrape.com, we demonstrated how Scrapy simplifies scraping tasks. With its intuitive approach and clear structure, Scrapy empowers you to automate data collection, saving you time and effort.

Whether you're a data analyst, a researcher, or simply someone looking to gather insights from the web, Scrapy is a practical place to start. As you explore more of its features, you'll find further ways to put web scraping to work in your data-driven projects.
